Lars TCF Holdhus | Data Awareness

Keywords: A.I., Alex Iadarola, algorithms, Artificial Intelligence, big data, bots, data, decryption, encryption, greek protests, IBM, music, personal data, Software, storage, surveillance, TCF, Technology

Alexander Iadarola interviews TCF about his algorithmically based practices

“More often than not,” the interview-ready TCFX bot suggests to me during one of our several communications, “art seems to indulge in the illusion of an autonomous discourse, ignoring the multiple connections between knowledge and power.” Dealing with the TCF system in any of its manifestations can be an uncomfortable experience: the more time you spend with it, the more you realize it’s directly tinkering with the processes of its own consumption, and accordingly, with you. Sometimes it feels invasive, while other times it feels revelatory. Either way, the rabbit hole only deepens as you research the human agent behind the project, Lars Holdhus (legal name Aedrhlsomrs Othryutupt Lauecehrofn, an anagram), learning about his interest in intervening in machine-human relationships via A.I., algorithmic composition, and cryptography.

Lars’ practice, which also includes visual art and lectures on subjects like alternative currency and the music economy, is on a fundamental level concerned with the relationship between knowledge and power, as his bot suggests. Those terms are hard to pin down in any circumstance, but they prove particularly evasive within his register—fittingly, their most obvious expression is through TCF’s various levels of encryption, a process Lars tells me “can be seen as a game of knowledge.”

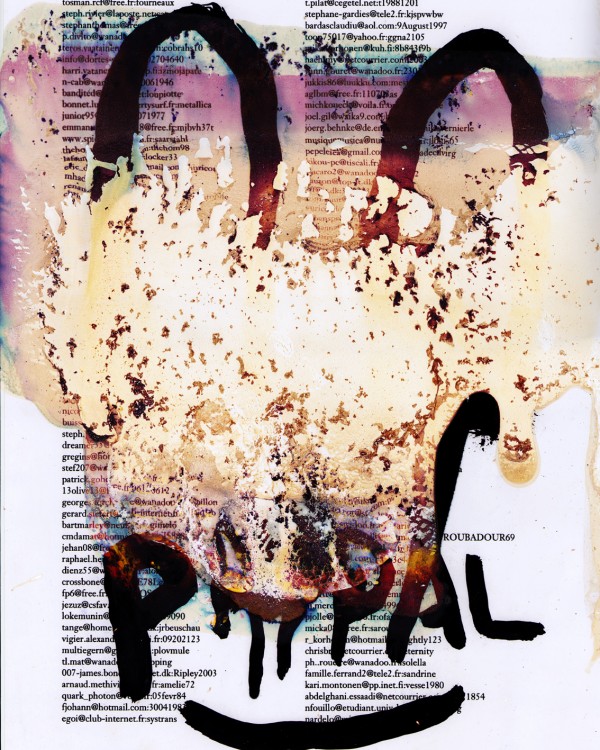

It starts with his track titles, like “D7 08 2A 8D 2A 37 FA FE 17 0E 62 39 06 81 C8 A1 49 30 6F ED 56 AD 5E 04,” which read like hexadecimal hash checksums and don’t yield easy translation. Going further, though, you find music researcher and composer Guy Birkin’s blog Aesthetic Complexity, which recently featured a post on spectrogram tests of Holdhus’ music. The results are amazing if you’ve spent any amount of time baffled by the logic of TCF’s compositions: Birkin found that the first track off his recent EP for Liberation Technologies yields an image of erupting violence from the 2011 Greek protests, a message simultaneously provocative and ambiguous, suggesting engagement with a degree of ideological remove. The revelation of course carries with it the implication that there are many more possible decryptions of TCF’s polysemous relationship with the world around it.
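Birkin’s exact procedure isn’t described here, but the general principle behind spectrogram imagery can be sketched: a sound is built so that its frequency content, plotted over time, draws a picture. The Python sketch below assumes a toy setup chosen purely for illustration (a 256-sample frame, a hypothetical band spacing, a 5x5 bitmap, non-overlapping unwindowed frames); it is not TCF’s or Birkin’s method, and real spectrogram viewers use overlapping windowed frames and display the magnitudes as an image rather than thresholding them.

```python
import numpy as np

FRAME = 256  # samples per analysis frame, also the FFT size (assumed)


def band_bin(row, rows):
    """Map a bitmap row to an FFT bin; row 0 (top of the image) gets the highest frequency."""
    return 8 + 4 * (rows - 1 - row)  # hypothetical spacing: every 4th bin from bin 8 up


def encode(bitmap):
    """Turn a 0/1 bitmap (rows = frequency bands, columns = time) into audio samples."""
    rows, cols = bitmap.shape
    n = np.arange(FRAME)
    out = []
    for c in range(cols):
        frame = np.zeros(FRAME)
        for r in range(rows):
            if bitmap[r, c]:
                k = band_bin(r, rows)
                # A whole number of cycles per frame puts all of this tone's energy in bin k.
                frame += np.sin(2 * np.pi * k * n / FRAME)
        out.append(frame)
    return np.concatenate(out)


def spectrogram(signal):
    """Magnitude spectrogram from non-overlapping frames, shaped (frequency bins, time)."""
    count = len(signal) // FRAME
    frames = signal[: count * FRAME].reshape(count, FRAME)
    return np.abs(np.fft.rfft(frames, axis=1)).T


def decode(signal, rows):
    """Read the bitmap back by thresholding the magnitude at each band's bin."""
    spec = spectrogram(signal)
    bins = [band_bin(r, rows) for r in range(rows)]
    return (spec[bins, :] > FRAME / 4).astype(int)


image = np.array([
    [1, 0, 0, 0, 1],
    [0, 1, 0, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 0, 1, 0],
    [1, 0, 0, 0, 1],
])
audio = encode(image)
print(decode(audio, rows=image.shape[0]))  # the 5x5 X pattern reappears
```

Because each tone completes a whole number of cycles per frame, its energy falls into a single FFT bin, which is why a simple threshold recovers the bitmap exactly in this toy case; with real recordings, spectral leakage and noise blur the picture.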

Holdhus is always finding ways to reach out of his work’s frame. Sometimes the legality of its moves can be blurry, particularly when you wonder how he gets the sheer breadth of valuable data his work contains. At other times, it’s not remotely blurry: Lars shares with me that some of his first artworks were made with credit card information harvested online, and in 2011 “presented to an audience that did not know what these codes meant. The piece was illegal when it was made but no one noticed the illegality of the piece or what it was supposed to mean. I’ve done all these different tests as an attempt to understand what the limitations of art are.”

And while his tests usually, if not always, have something to do with A.I. (for instance, his recent sonification of Craig Reynolds’ Boids software, which Nora N. Khan, Laura Greig and I tried our hands at interpreting over at Rhizome), they are also very often invested in handling data that directly concerns people. In the context of a TCF work, it can be troubling to look at people’s data, which arrives partially censored or encrypted and can only be read through a heavily mediated and partially obstructed empathy. The work thus feels like it contains an element of mimesis, reflecting the viewer or listener back to themselves, but it’s discomfortingly unclear what’s being reflected, or through what processes.

It is odd to feel like you’re explicitly part of a test: when “slow” spikes into your listening during the first track of TCF’s Liberation Technologies EP—in another time and place, TCFX tells us that “slow movements were trying to avoid the engagement of objects into the practice that is most often employed when too much attention is given to human action”—it can feel like a playful challenge to your interpretive skills, or it can be read as a prescription calibrated to the listener. In general, it’s unclear exactly what the TCF system is up to—as if it were ever up to only one thing—but we can rest assured that Holdhus will be there to measure results after the experiment, somehow.

What follows is a conversation with Holdhus about his practice and large datasets.

Alexander Iadarola: You’ve mentioned previously that you are skeptical of claims that big data marks a paradigm shift in the history of information. Could you say more about that? What guidance do you think history could give us on big data?

Big data is often described in terms of the three V’s: volume, variety and velocity. According to IBM, 2.5 exabytes of data were generated every day in 2012.[1] If one considers the rise of data to be exponential, there’s no paradigm shift in the data itself.

From the beginning of computational knowledge[2] and throughout the history of data storage,[3] we can see that as soon as it became possible to take measurements from collected data, those measurements were put to use. The Hollerith tabulating machine (punch cards) marked the beginning of collecting data in larger amounts. It was widely used in the military and finance sectors and was later used for legal documents (government checks). In 1941 the newspaper Lawton Constitution used the term “information explosion” to describe the effects that punch cards had on society. Later, at a meeting at Georgia Tech in 1963, Dr. Wernher von Braun stated that all human knowledge accumulated since the beginning of time had doubled between 1750 and 1900. This amount of knowledge doubled again between 1900 and 1950 and redoubled between 1950 and 1960. He predicted that it would double again between 1960 and 1967.[4]
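Von Braun’s doubling intervals can be turned into implied yearly growth rates with a little arithmetic. The sketch below assumes smooth, constant growth within each interval (a simplification; the quoted figures are rough estimates, not measurements), so the rate r for an interval of T years satisfies (1 + r)^T = 2.

```python
# Doubling intervals attributed to von Braun (start year, end year); the last one is his prediction.
intervals = [(1750, 1900), (1900, 1950), (1950, 1960), (1960, 1967)]


def implied_annual_rate(start, end):
    """Constant yearly growth rate that doubles a quantity between start and end."""
    return 2 ** (1 / (end - start)) - 1


for start, end in intervals:
    print(f"{start}-{end}: {implied_annual_rate(start, end):.2%} per year")
```

Under that assumption, the implied yearly rate rises from about 0.46% for 1750-1900 to about 10.4% for the predicted 1960-1967 period.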

Data is closely connected to knowledge, and both have been used throughout history to gain political power. The Library of Alexandria was one of many institutions that became a symbol of power for the Ptolemaic dynasty in its competition with the kingdom of the Attalids in Pergamum.[5] The two were competing for prominence in the Greek world, and one way to do that was through building institutions and “data” storage.

But what about the immense storage possibilities? It seems like the notion of the archive is undergoing a shift.

Data storage is also rising exponentially. Here is IDC’s outlook on big data through 2020.[6] IDC predicts that from 2005 to 2020 the digital universe will grow by a factor of 300, from 130 exabytes to 40,000 exabytes (5,200 GB for every person on Earth).

They also estimate that by 2020, 33% of all data will contain information that might be valuable if analyzed. Another interesting claim in the report is that far less information is created by individuals themselves than is created about them.

There’s also new research that hints in this direction. Harvard researchers have shown that DNA could store 700 terabytes of data in a single gram,[7] and HP is developing a new computer based on memristor technology that it calls “The Machine”.[8] According to HP, the design will drastically reduce both energy consumption and the number of storage units needed.

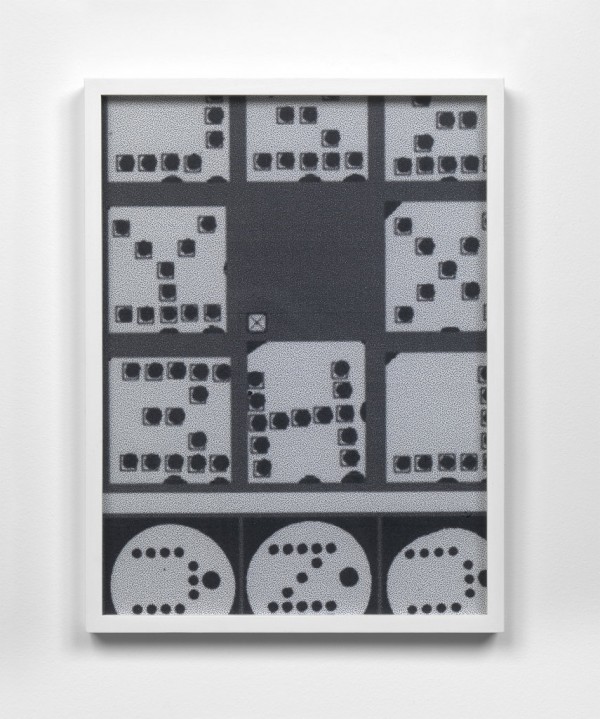

Taking into account the graph above and Moore’s law, let’s see how things develop.
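The IDC figures quoted in this answer can be unpacked with some quick arithmetic. The sketch below assumes steady compound growth between 2005 and 2020 (IDC’s forecast need not follow a smooth curve) and decimal units, where 1 exabyte is 10^9 gigabytes.

```python
import math

start_eb, end_eb = 130, 40_000  # IDC's 2005 and 2020 figures, in exabytes
years = 2020 - 2005

factor = end_eb / start_eb                    # IDC rounds this to "a factor of 300"
annual_growth = factor ** (1 / years) - 1     # compound yearly rate, assuming smooth growth
doubling_years = math.log(2) / math.log(1 + annual_growth)

# 1 EB = 1e9 GB, so the 5,200 GB per person figure implies a population estimate.
people = end_eb * 1e9 / 5_200

print(f"growth factor: {factor:.1f}")
print(f"annual growth: {annual_growth:.1%}")
print(f"doubling time: {doubling_years:.2f} years")
print(f"implied population: {people / 1e9:.2f} billion")
```

Under that assumption the forecast amounts to roughly 46 to 47 percent growth a year, or a doubling about every 1.8 years, and the per-person figure is consistent with a world population of about 7.7 billion.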

Guy Birkin recently decoded your track “97 EF 9C 12 87 06 57 D8 B3 2F 0B 11 21 C7 B2 97 77 91 26 48 27 0E 5D 74” via a spectrogram to reveal an image of the protests in Greece in 2011. How might you see this kind of political disruption in regard to big data, in terms of the fight to secure data and privacy?

The Clipper chip[9] was one of the first known attempts to gain access through a backdoor built into an “encryption device”. The NSA promoted it for adoption by telecommunication companies so that the NSA could access private communication. Another famous NSA attempt was a backdoor built into Lotus Notes.[10] Lotus then tried to get more government clients to use the exploit. Since then we’ve seen many attempts by governments to access private communication and data. The methods they use remind me more of black hat[11] hacking than anything else. The use of botnets, trojans and 0day exploits for government activities displays a certain desperation to control the web.

There are two sides to this story: data encryption can protect important civil liberties, but it also poses a certain threat to public safety. It is a difficult debate about where to draw the line between what law enforcement needs to access and what is private. In the age of big data this has become increasingly hard to control, and new laws and regulations take time to put in place. Technology often advances faster than laws and regulations can be reformed. That has led to an explosion in grey-area activity such as synthetic drugs. We might reach a point where it is impossible to regulate drug distribution and other illegal activities. Before that happens, there will be numerous attempts by law enforcement to shut this activity down in the form of increased control over postal services, increased surveillance, hacking of Tor[12] and scare tactics through severe prison sentences for hackers and people involved in drug trafficking online.

Can you say more about how you see governments going after private info and data as little more than black hat hacking?

It’s only some of their methods that remind me of black hat tactics. Their intentions are on a different plane. Software and computers are being used in cyber attacks at an increasing rate, and the damage cyber weapons can do is already quite significant. Stuxnet is a good example of that. It was developed by the US and Israel to shut down the nuclear centrifuges in Iran. While it succeeded at that, it also infected more than 50,000 computers that had nothing to do with this attack. In other words, 50,000 computers turned into carriers of a weapon to destroy the nuclear centrifuges. Big data has also been used to carry out similar attacks. The NSA has identified targets through metadata analysis and cellphone tracking technologies, after which drones were sent to kill them.[13]

Since our infrastructure is built on fragile computer systems full of flaws, the amount of knowledge you need to carry out a minor attack (DDoS attacks, exploits, etc.) is fairly small. Governments have always been interested in taking out dissidents. It’s nothing new that governments have access to large amounts of information, so the most interesting question for me is what a healthy balance of power looks like.

How do the realities we’re discussing affect your practice? Do you in any way conceive of your work as a form of resistance to these “evil” approaches to collecting and storing big data? I think of the role encryption plays in your work.

It’s hard to say if these practices are evil. They are evil to some, while others with different political views would argue they’re necessary. I don’t take the approach of being in resistance, as I question the results of past historical acts of opposition. Most people have little or no understanding of cryptography and its history. My interest is simply to get people engaged with the deeper structures of our society instead of the simple interfaces that most of us are exposed to (Facebook, Google, etc.). What kind of opinion they form after seeking that knowledge is not in my control. Encryption can be seen as a game of knowledge and a challenge to seek out the clues to what something means or represents. This is something that Guy Birkin understood and used to decipher the last track on 415C47197F78E811FEEB7862288306EC4137FD4EC3DED8B. It changed the meaning of that piece and opened it up to another interpretation. One could say that it was a contribution to TCF.

Are you also skeptical of the results of previous historical attempts at artistic resistance?

When I analyze the history of art and compare the intentions of artists with the actual outcomes of their resistance, I start to question the success of artistic resistance. Political resistance often ends up in a classical Marxist scenario, and Ray Brassier, for example, has pointed out some of the issues with that.[14] I have more faith in guidance and navigation when it comes to art and its function in our society.

How do you envision people “plugging in” to your work? Compared to, say, plugging in to a Robert Smithson piece, which involves an emphasis on ambiguous and fleeting kinds of perception and spatialized embodiment. Engaging with TCF seems to involve a different set of fundamental parameters. What’s an ideal listener exchange with a TCF track for you?

There’s no ideal listener exchange, as I do not want to dictate how people should experience something. Some people have described their LSD experiences while listening to TCF and others have climbed mountains while listening to it. Some take a more analytical approach to it and some might be offended by it. I take all these approaches into account when I compose music. Since my practice spans running a small tea business, making artworks and producing music, there are several entry points. In my latest approach to composition (which will come out on Ekster in the first half of this year) I put myself in the position of a listener more than a composer. Using different algorithmic compositional tools, I selected sounds and then composed music based on a larger goal for the whole record. This turned out to be a strange process where I had to force myself to accept certain compositional decisions. The record tries to describe a virtual render of a symphonic work where all instruments are more or less generated. It is partly composed by a machine. I therefore cannot expect too much from a listener, as I myself struggle with the process of composing.

Also, am I correct in inferring that encryption is in part a game of knowledge to you? Is your interest in encryption in art comparable to the tradition of “decoding” the meaning of a work by means of interpretation, in the sense of figuring out what a painting or piece “means”?

I’m interested in what artworks can contain and how they change meaning over time. Some of my first artworks were based on credit card information I found online and presented to an audience that did not know what these codes meant. The piece was in the grey zone when it was made, but no one noticed the illegality of the piece or what it was supposed to mean. There was no narrative that spelled out “artist gets credit card information from the dark web and makes artworks out of it”. The artworks were shown in 2011, a time before the general art audience knew about Tor, the deep web, etc. I’ve done all these different tests as an attempt to understand what art can contain and where its limits are.

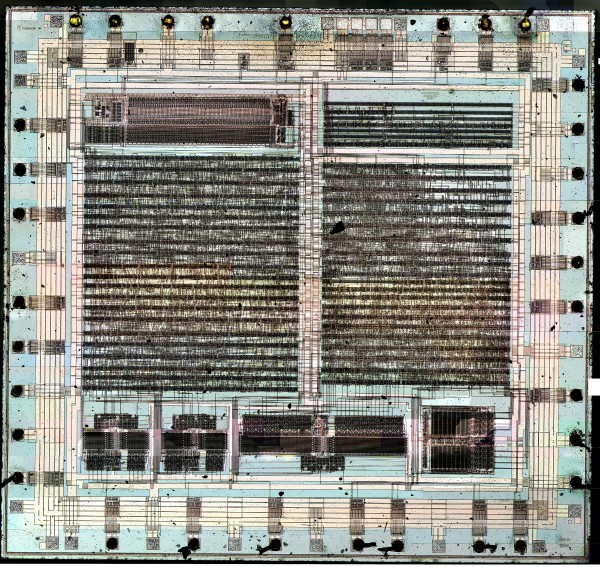

E3 08 42 9E 11 A2 8B AA 6B 4A DA E5 BD 67 34 71 84 9C 3C BE E3 BD D6 4C EA EB 87 91 84 86 81 84, Lambda print, Etching, 2011

How do you see the rise of big data affecting music economies and music production in the near future? I don’t know if you saw this.

It has already affected music, for example through Shazam’s use of big data. They claim that they can now predict the next big artist to break. Content will be shaped by big data, and pop music will develop even more slowly than before. Here’s a great example where I suspect the use of big data, but also a group of songwriters collaborating. EDM is also a good example of how streamlined music is becoming.

Before people had large datasets available, you had to rely on a smaller amount of data to make similar predictions. Today’s predictions can be more precise and production turnaround is faster, which makes it more profitable to follow a certain trend than to invent something new. The problem is that sometimes it’s hard to predict regardless of how much data you have available. These surprises might be less appreciated in this type of calculated economy.
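One standard way to see why more data sharpens a prediction is the standard error of an estimated proportion, which shrinks with the square root of the sample size. The sketch below is a textbook illustration, not a model of how Shazam or any label actually predicts hits; the 30% listener share is a hypothetical number.

```python
import math


def standard_error(p, n):
    """Standard error of a proportion p estimated from n independent observations."""
    return math.sqrt(p * (1 - p) / n)


p = 0.3  # hypothetical share of listeners who would stream a given track
for n in (100, 10_000, 1_000_000):
    print(f"n = {n:>9,}: +/- {standard_error(p, n):.5f}")
```

Each hundredfold increase in data narrows the error by a factor of ten. This only captures sampling noise, though; it says nothing about the surprises mentioned above, when what is being measured (listeners’ taste) changes.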

I’m curious about the ways in which you think people might turn to online platforms that don’t allow data collection in order to “protect” themselves from big data. What are practical methods of resisting big data’s more malevolent uses today? What are some ways people could limit their output of data without “going off the grid”?

Legislation and education. I believe it’s possible to limit the ability of companies, private agencies and law enforcement to use data the way they do right now. End-to-end encryption and rebuilding the fundamentals of the internet would also make it more difficult to collect large amounts of data. If it becomes increasingly difficult to collect data, law enforcement will reconsider collecting data in the amounts they currently do. Decentralization of email, cloud storage and social networks will also help. If open-source development continues, viable alternatives to existing services may emerge. Unfortunately, people have so far preferred free services over protection of their own data.
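End-to-end encryption rests on the two endpoints agreeing on a key that the service relaying their messages never learns. A minimal sketch of that idea is a textbook Diffie-Hellman exchange. The prime, generator and SHA-256 key-derivation step below are toy assumptions chosen to keep the example short, not a secure configuration; real messengers use vetted groups or elliptic curves and authenticate the exchanged values.

```python
import hashlib
import secrets

# Toy public parameters, for illustration only (NOT secure).
P = 2**127 - 1  # a Mersenne prime
G = 3


def keypair():
    """Return (private exponent, public value); only the public value is ever sent."""
    private = secrets.randbelow(P - 3) + 2  # secret exponent in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_key(my_private, their_public):
    """Both sides compute G^(ab) mod P, then hash it into a 32-byte symmetric key."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()
# A relaying server would only ever see alice_public and bob_public.
print(shared_key(alice_private, bob_public) == shared_key(bob_private, alice_public))
```

Because (G^b)^a and (G^a)^b are equal modulo P, both endpoints arrive at the same key, while an observer holding only the public values would have to solve a discrete logarithm to recover it.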

1 http://www.bbc.com/news/business-26383058

2 http://www.wolframalpha.com/docs/timeline/

3 https://www.backupify.com/history-of-data-storage/

4 Georgia Tech Alumni Magazine Vol. 42, No. 03, 1963

5 http://www.research.ed.ac.uk/portal/files/11871537/Culture_and_Power_in_Ptolemaic_Egypt_the_Library_and_Museum_at_Alexandria.pdf

6 http://www.emc.com/collateral/analyst-reports/idc-the-digital-universe-in-2020.pdf

7 http://www.extremetech.com/extreme/134672-harvard-cracks-dna-storage-crams-700-terabytes-of-data-into-a-single-gram

8 http://www.hpl.hp.com/research/systems-research/themachine/

9 https://epic.org/crypto/clipper/

10 http://www.heise.de/tp/artikel/2/2898/1.html

11 A “black hat” hacker is a hacker who “violates computer security for little reason beyond maliciousness or for personal gain” (Moore, 2005)

12 http://www.wired.com/2014/12/fbi-metasploit-tor/

13 https://firstlook.org/theintercept/2014/02/10/the-nsas-secret-role/

14 http://www.metamute.org/editorial/articles/wandering-abstraction